Create own GitLab Runner

Before register runner on our server, ensure the server has installed GitLab Runner and/or docker.

Checking the service status:

$ systemctl status gitlab-runner

$ systemctl status docker

Once server is setup, we can register our new runner. We will need URL and token from Settings > CI/CD > Runners.

$ gitlab-runner register

Enter the GitLab instance URL (for example, https://gitlab.com/):

[[ our URL from Settings > CI/CD > Runners ]]

Enter the registration token:

[[ our token from Settings > CI/CD > Runners ]]

Enter a description for the runner:

[[ your runner description ]]

Enter tags for the runner (comma-separated):

[[ later we can setup jobs to only use runners with this specific tags ]]

Enter an executor: ssh, docker+machine, kubernetes, custom, docker-ssh, parallels, docker-ssh+machine, docker, shell, virtualbox:

[[ scenarios to run our builds, see https://docs.gitlab.com/runner/executors/ ]]

Enter the default Docker image (for example, ruby:2.6):

[[ your default docker image ]]

Runner registered successfully. Feel free to start it, but if it's running already the config should be automatically reloaded!

Next, start the runner

gitlab-runner start

Runner will show up on Settings > CI/CD > Runners in GitLab.

Basic .gitlab-ci.yml

# defining our global docker image

image: node

# defining our stages

stages:

- build

- test

- deploy

- deployment tests

# defining our job

build website:

stage: build

script:

- npm install

- npm install -g gatsby-cli

- gatsby build

# data pass between stages/jobs, usually for output of a build tool

artifacts:

paths:

- ./public

# defining our job

test artifact:

# local docker image

image: alpine

stage: test

script:

- grep "Gatsby" ./public/index.HTML

test website:

# second job of stage test so will run parallel

stage: test

script:

- npm install

- npm install -g gatsby-cli

- gatsby serve & # ampersand indicates running on the background as a daemon and execute the next command

- sleep 10 # waiting for development server ready

- curl "http://localhost:9000" | tac | tac | grep -q "Gatsby" # https://stackoverflow.com/questions/16703647/why-does-curl-return-error-23-failed-writing-body

deploy to surge:

stage: deploy

script:

- npm install --global surge

- surge --project ./public --domain instazone-dindas.surge.sh

test deployment:

image: alpine

stage: deployment tests

script:

- apk add --no-cache curl # curl is not installed by default on the alpine image

- curl -s "https://instazone-dindas.surge.sh" | grep -q "Hi people"

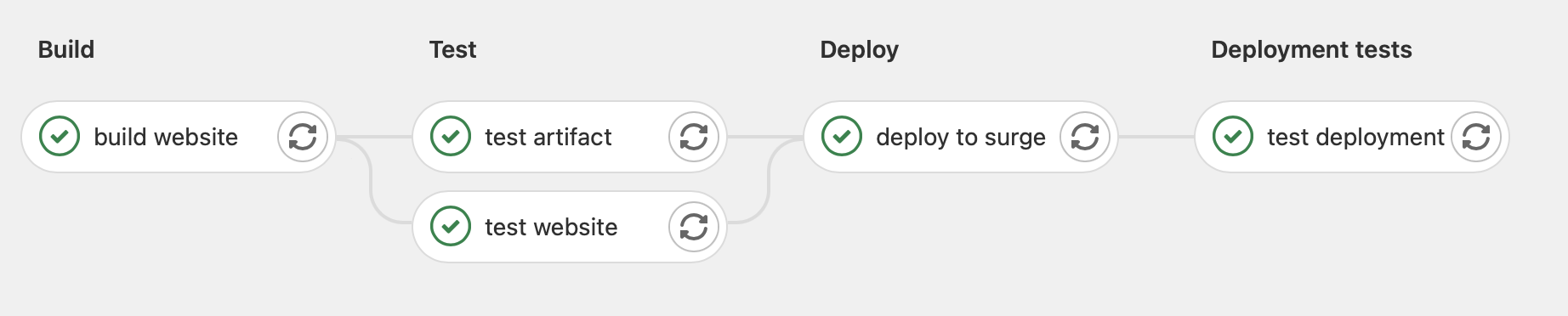

Our pipeline will be:

Environment Variables

- Managing own variable with

Settings > CI/CD > Variables. These variables will be injected by GitLab and will be available in the runner. - GitLab has predefined environment variables see this reference. For example,

$CI_COMMIT_SHORT_SHAto the first eight characters of our commit revision. - We also can define inside YAML configuration file, then add to environments. The custom variables then can be tracked in

Operations > Environments

variables:

DEV_DOMAIN: localhost:9000

PRODUCTION_DOMAIN: instazone-dindas.surge.sh

test website:

stage: test

environment:

name: dev

URL: http://$DEV_DOMAIN

Artifacts vs Cache

In GitLab, every job is starting in a clean environment. We can use artifacts or cache to avoid GitLab download those all the time on every jobs on every pipelines.

Artifacts

- Use to avoid work duplication in jobs.

- Data pass between stages/jobs of a single pipeline, usually for output of a build tool.

- Use

dependencies: []inside the jobs that doesn't need any artifacts.

Cache

- Use cache to hold some files, which will be needed later.

- Data pass between subsequent runs of the same job, usually used for external project dependency, like node_modules.

- Can be defined globally (shared cache) or locally.

- If defined globally, we can use

cache: {}inside the jobs that we don't want to use cache. - Cache identify by key.

Cache to optimize build speed

- The default cache behavior in GitLab CI is to download the files at the start of the job execution (pull) and to re-upload them at the end (push). The chance of an npm dependency changing during the pipeline's execution is very small, so we can amend the global cache configuration by specifying the pull policy

policy: pull. - To push a cache, we can use scheduled, for example, to run only once per day to update the cache.

- Use

${CI_COMMIT_REF_SLUG}to the key make we have cache based on a specific branch. - Use

${CI_PROJECT_NAME}to the key make we have one cache for all branches. - Add

${CI_JOB_NAME}to the key if different jobs of your pipelines don't need to share the same cache.

Our YAML file will be:

# defining our global docker image

image: node

# defining our stages

stages:

- build

- test

- deploy

- deployment tests

- cache

# can be defined globally or locally

cache:

# gitlab need a key to identify cache

key: ${CI_COMMIT_REF_SLUG} # the branch or tag name for which project is built

paths:

- node_modules/

# just download the files (pull) at the start of the job execution

policy: pull

variables:

DEV_DOMAIN: localhost:9000

PRODUCTION_DOMAIN: instazone-dindas.surge.sh

# job to uploading (push) the cache

update cache:

stage: cache

script:

- npm install

cache:

key: ${CI_COMMIT_REF_SLUG}

paths:

- node_modules/

policy: push

# make sure the job only runs when a schedule triggers the pipeline

only:

- schedules

# defining our job

build website:

stage: build

script:

- echo $CI_COMMIT_SHORT_SHA

- npm install

- npm install -g gatsby-cli

- gatsby build

# data pass between stages/jobs, usually for output of a build tool

artifacts:

paths:

- ./public

# make sure that it does not run when a schedule triggers the pipeline

except:

- schedules

# defining our job

test artifact:

# local docker image

image: alpine

stage: test

# disabling the cache for this job

cache: {}

script:

- grep "Gatsby" ./public/index.HTML

# make sure that it does not run when a schedule triggers the pipeline

except:

- schedules

test website:

# second job of stage test so will run parallel

stage: test

environment:

name: dev

URL: http://$DEV_DOMAIN

script:

- npm install

- npm install -g gatsby-cli

- gatsby serve & # ampersand indicates running on the background as a daemon and execute the next command

- sleep 10 # waiting for development server ready

- curl "http://$DEV_DOMAIN" | tac | tac | grep -q "Gatsby" # https://stackoverflow.com/questions/16703647/why-does-curl-return-error-23-failed-writing-body

# make sure that it does not run when a schedule triggers the pipeline

except:

- schedules

deploy to surge:

stage: deploy

# disabling the cache for this job

cache: {}

environment:

name: staging

URL:

script:

- npm install --global surge

- surge --project ./public --domain $PRODUCTION_DOMAIN

# make sure that it does not run when a schedule triggers the pipeline

except:

- schedules

test deployment:

image: alpine

stage: deployment tests

# disabling the cache for this job

cache: {}

script:

- apk add --no-cache curl # curl is not installed by default on the alpine image

- curl -s "https://$PRODUCTION_DOMAIN" | grep -q "Hi people"

# make sure that it does not run when a schedule triggers the pipeline

except:

- schedules

This new configuration improves our pipeline build time by around 2 minutes (07.53 to 05.32).

More

Job running only when manually triggering.

# The job only can run when manually triggering

when: manual

# block next job

allow_failure: false

Jobs running only on a specified branch.

only:

- master

Job running only on merge request.

only:

- merge request

Specifying job to run with specific tags runner.

# this job run by runner that has both ruby and postgrres tags

tags:

- ruby

- postgres

References:

GitLab CI: Pipelines, CI/CD and DevOps for Beginners

Setup Pipeline with GitLab CI on Ubuntu

Practical tips regarding build optimization for those who use Gitlab

More References:

Using Docker Compose on Shell Executor

Manage User Permission

For PHP Project